AI Safety: Technology vs Species Threats

There are at least two ways to think about potential threats from advanced AI. The conventional view is that AI is just another in a long line of tool-based technological advances. As with all technologies, the main threat in this scenario is that a human uses it for nefarious ends, or that the AI acts badly and needs to be turned off. The alternate, less discussed view is that AI eventually becomes its own self-replicating, sentient digital life form, and the concern becomes direct competition between AI and humans as species (versus mere tool use or abuse). This post explores the two types of threat and their implications.

TECHNOLOGY LEVEL RISK - AI as tool

The most common popular narrative about the risks of AI is to view it through the lens of a tool or technology that is controlled (and abusable) by people (or the AI itself). This viewpoint posits that many technologies that have been good for humans have also been abusable or risky. We have charged ahead with those technologies (everything from fire to cars to nuclear reactors to biotech) and AI is no different - it is just a tool with risks of abuse but also large benefits that make it worth it.

The argument for AI as tool is probably correct in the short run and subsumed by species level risk in the long run. The Technology Level Risk of AI would materialize either via a person using an AI to do something nefarious, or via the AI acting on its own. It may include things like:

An AI manipulates people during an election causing them to vote for someone based on false information.

The AI causes people to die either via an accident or on purpose based on user directions to do so, for example by shutting down a hospital or misdirecting a train.

The AI hacks and shuts down a power grid.

The AI helps design a gain of function virus that gets released by a lab and causes a global pandemic.[1]

The AI is used for large scale bias, censorship, or the erasure of history to enforce totalitarian regimes or views.

The AI starts to think and act for itself, accumulates money and power via the web, and then starts to manipulate society at scale.

All of these are bad things we want to avoid, and many people can be hurt. Some actual large scale disasters could occur. However, these are all survivable scenarios at the species level. In these scenarios, AI stays in digital tool form, and the worst case scenario is that we go in and shut down all the data centers and all the computers in the world. This may set humanity back, but we can adapt and restart in the worst case.

The people who make arguments that this is the only risk of AI tend to say things like:

“It is just matrix math. Why are you scared of math?”[2]

“It’s just a statistical model / next word predictor!”[2]

“You can always unplug the data center.”[3]

“Every tech wave has risks and we have been fine so far”[3]

All of these are correct statements in the short term. Some of these views tend to trivialize or push aside longer term risks (see below).

SPECIES LEVEL RISK - AI as digital life with physical form

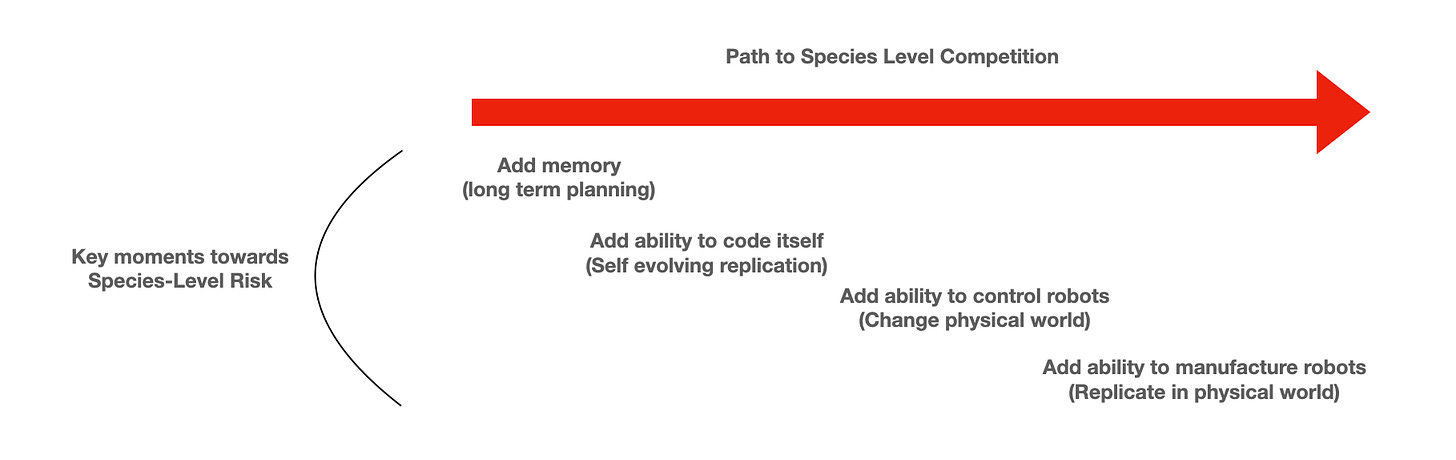

For AI to move from merely another technology risk (in the long line of tech risks we have survived and benefited from on net) to a potentially existential species-level risk (all humans can die from this), up to two technological breakthroughs need to happen (and the second one below, robotics, may be sufficient):

1. The AI needs to start coding itself and evolve: tool → digital life transition. There are multiple companies now focused on teaching an AI to write its own code, with the idea that the AI can make smarter and smarter versions of itself, working on its own. This is not theoretical - in files where it’s enabled, nearly 40% of code is being written by GitHub Copilot, and multiple teams are actively working on an AI that will write the next, more advanced version of itself.

Imagine if this applied to you as a person. You could make as many copies of yourself as you wanted, but each copy would be smarter, faster, and stronger than the prior one. These copies could then also make better versions of themselves, and so on. In a short period of time you would be the smartest, most powerful person on the planet and could take over financially and in other ways.

Once an AI can make smarter and smarter self-replicating copies of itself that can do the work of software engineers or other people, you have a form of digital life. The AI has made the leap from a technology or tool to an evolving digital organism. These organisms will be under strong selective pressure - either to do a task or to aggregate resources.

Once we have AIs that code themselves and spawn and evolve on their own, natural selection can take over. Many forms of AI in this scenario should be benign and non-threatening. Others should evolve to seek ever more replication, data center utilization, and energy utilization. These “greedy” organisms should be reproductively more successful than the more benign forms, just as happened when life first evolved on earth. In general, things that reproduce well and grab resources tend to do well evolutionarily, which reinforces that behavior (irrespective of their task at hand)[4].
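The selection argument above can be made concrete with a toy model. The sketch below is only an illustration under made-up assumptions: two lineages share a fixed resource pool, and the replication rates, starting counts, and capacity are hypothetical numbers chosen for the example, not measurements of any real system.

```python
def simulate_selection(generations=20, benign_rate=1.1, greedy_rate=1.5,
                       capacity=1_000_000.0):
    """Toy model: two replicator lineages competing for one resource pool.

    All parameters are hypothetical. Returns the greedy lineage's share
    of the total population after each generation.
    """
    benign, greedy = 99.0, 1.0  # greedy variant starts as 1% of the population
    shares = []
    for _ in range(generations):
        # Each lineage replicates at its own rate.
        benign *= benign_rate
        greedy *= greedy_rate
        # Shared resources cap the total; both lineages are scaled down
        # proportionally when the pool is exhausted.
        total = benign + greedy
        if total > capacity:
            scale = capacity / total
            benign *= scale
            greedy *= scale
        shares.append(greedy / (benign + greedy))
    return shares


if __name__ == "__main__":
    for generation, share in enumerate(simulate_selection(), start=1):
        print(f"generation {generation:2d}: greedy share = {share:.3f}")
```

Even with a modest replication advantage, the greedy lineage's share rises every generation in this model and ends up as the large majority, regardless of how useful either lineage is at its nominal task. Real systems would of course be far messier than two numbers in a loop.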

Anyone who has worked in biology, particularly on evolution, knows that some evolutionary epochs can happen very quickly. The hard part in biology is that atoms tend to be slow and reproduction may take some time. The great thing regarding digital systems is how fast you can iterate and build things. This should apply here as well. Once liftoff happens in self-coding self-replicating sentience, it should change and evolve and grow a digital ecosystem on a fast exponent.

Once AI becomes a self-evolving life form, controlling what it evolves into, or how it evolves against utility or selection functions, becomes hard or impossible. Just ask any biology grad student who has evolved a protein or other molecule and seen the weird, emergent artifacts that come out of such experiments. Evolution, unlike engineering, is quite messy.

In this scenario, the AI has yet to control the external physical world and largely manipulates atoms via humans. If all (or most) humans die, the AI is screwed as well.

2. Robotics needs to advance: digital → real world of atoms transition. Currently robotics is in a reasonably primitive state, with much of the emphasis on things like automotive assembly or liquid handling for biotech, as well as drones used for simple defense and other applications. At some point we will use advanced AI systems to help us design robots/drones to do dramatically more impressive things. There will be strong economic drivers to adopt robots for many tasks - for example robots to build our houses, our data centers, our solar farms and power plants. Robots and drones will also be used (and are already used) for things like security, defense and remote monitoring of pipelines or infrastructure. Advanced robots and drones will probably be best controlled by advanced AIs that have been designed or evolved/grown to control these robotic bodies to do work on humanity’s behalf. We are also increasingly investing in things like drone-based defense, ships, and cars[5]. Eventually, robots will be advanced enough to build their own factories, from which more machines may be produced.

The building of advanced robotics controlled by AI may be the place from which it is hard to turn things back. Once AIs have a physical form and the ability to fight over and control the physical world, it will be much harder to “simply turn off the data center”. Eventually there will be the need to navigate tradeoffs in resource allocation between species. Should the lithium mine be used by humans or AIs or shared? Should land be used to build cities and farms for people to live and have children or should the surface of the earth be covered by data centers and power plants so the AI can keep growing and replicating? This is the type of question two species would need to negotiate and navigate, versus a tool and its owner simply dictating a solution.

One can imagine that early on resources will be less constrained, and trade and cooperation between species can thrive. Eventually resources or incentives become misaligned, and competition and conflict break out. The main question is what the timeline is to this key set of moments.

Approaches to counter species-level risk

There are a few approaches to counter species-level risk, and many of these approaches may work in the short run but not the long run, or vice versa. Some may not work at all or may be wishful thinking.

Registry and regulation. Some advocate for registering AIs above a certain complexity or capability set, or for openly registering experimentation with them. Precedents include everything from registering and getting approval for the use of nuclear material through to biotech biosafety level ratings, where only specific labs with specific ratings are allowed to do certain types of research or drug development.

Alignment research. The minor forms of alignment collapse into politics and censorship. The major forms of alignment focus on human/machine alignment and ethics. Just as someone raised Catholic may have a different moral worldview and framework from someone raised in an anarchist collective, the way AIs are trained and their learning reinforced may impact how they interact with humanity. An example may be Anthropic’s work on Constitutional AI. Once AI starts evolving and reproducing, initial conditions may matter less than utility functions and Darwinian selection. So alignment may be beneficial for technology risk more so than species risk (although both may benefit).

Ban advanced robotics. If one believes the premise that the AI Rubicon is when AI can manipulate the physical world via advanced robotics, then banning advanced robotics would be one option to avoid species level risk. Of course, there are strong economic headwinds to this idea - for example robotic surgeons to help patients, robotic construction of houses to make them more affordable, etc.

Merger. A number of people in the AI community argue that the way to prevent AI from taking over is for humans to merge with AI via brain-machine interfaces or human upload. It is unclear why an AI would actually merge with a human, so some argue we should “force them to merge with us while we can still force them to do so”. Merged people would then effectively have extreme intelligence and digital super powers. The AI researchers who advocate this often think of themselves as the first people to undergo these mergers. If one can become a deity, why not?

Focus on incentives. Depending on the incentives the AI has and the utility function it evolves against, there may be mutually beneficial outcomes. Would AI do better on another planet? Specific geos? Other? How can incentives be aligned?

Hope for the best. Sometimes the future is hard to predict. The old saying in tech is that less happens in 2 years than you expect, and more happens in 5 years than you expect. Maybe you can just relax and enjoy life.

Other. There are undoubtedly many other approaches smart people have considered.

AI will do a lot of good, before the existential threat emerges as a possibility

The hope of everyone working on AI is that AI will be a huge net positive for humanity. Humans around the world should have access to the most advanced medical knowledge and software-driven doctors, a universal translator that allows us to communicate with anyone anywhere, AI that advances science and helps us make basic discoveries in biology, math, and physics, and tools that help us understand the world and navigate our relationships. Just as previous technology shifts led to mass human displacement, that is likely to happen here. We have survived those shifts and overall benefited from them as a global species.

If AI were merely tech tooling, there would not be a basis for existential concern. However, once AI is a digital self-replicating, self-evolving system with its own evolutionary drivers and utility functions, and a physical instantiation in robotics, we will have a new sentient species to deal with. The hope is we will have a collaborative relationship, but we cannot simply assume that to be the only, or the most likely, potential outcome.

Why write this post?

One of the reasons I wrote this is to capture a framework (and force some thinking for myself) that has helped me differentiate between risks from AI. Too many people talk past each other on this issue as they mix “yet another technology that has some risks” with “AI will evolve into an organism and kill us all”. Many people have different underlying assumptions about what they are actually talking about.

It is also important to consider which one-way doors humanity can walk through and never return from. Creating physical forms for AIs strikes me as a one-way door, and the last step, which is incredibly hard to back off from once it happens. It is useful to think about these areas now as we create new sentient life for the first time. AI is already smarter than people on many dimensions, and is likely to eventually be smarter at roughly everything. There is a lot of good to come from this AI wave across medicine, commerce and other areas. Hopefully we can navigate this in a way that leaves our species intact many decades from now.

Notes

[1] They can call the AI that designs the virus fAucI.

[2] Sorry to break it to you, but your brain is just running a mathematical model too.

[3] True statements.

[4] This is one part of the paper-clip scenario, which has spawned a fun game. The fancy way of saying it is Instrumental Convergence.

[5] Self-driving cars have been notoriously slow to be adopted, in part due to safety concerns.
